Ryan: We've been working on a new game the past couple of months and this is the first time we're going to be talking about it. You can find out more about the game by watching the video above, and then geek out over the AI by reading Marnielle's post below.

I’m excited that we’re making a builder game in the vein of Prison Architect, Banished, and Rimworld. I love playing such games. Ours is a school management game where you can design classrooms and offices, hire teachers, design the curriculum, and guide students to educational success.

For every new game, I aim to implement a new algorithm or system and learn something new. I’ve always been fascinated with an AI planning system called Goal Oriented Action Planning, or GOAP. If you’re not familiar with it, here’s a simple tutorial. I haven’t developed such a system myself, as the games I’ve made so far had no use for it. I think it’s the perfect AI system for builder games. I hope I’m right!

Why GOAP?

The primary reason is that I’m lazy. I don’t want to wire and connect stuff like you do with Finite State Machines and Behaviour Trees. I just want to provide a new action and have my agents use it when needed. Another main reason is that I reckon there are going to be a lot of possible action orderings in the game. I don’t want to enumerate all of those combinations. I want the game agents to discover them and surprise the player.

Another important reason is that the AI system itself is an aid for development. There are going to be lots of objects in the game that the agents may interact with. As I add them one by one, I’ll just add the actions that can be done with each object and the agents will do the rest. I don’t have to reconfigure the agents much every time a new action becomes available. Just add the action and it’s done.

Tweaking The System

While making the system, I had some ideas that would make the generic GOAP system better. They sure have paid off.

Multiple Sequenced Actions

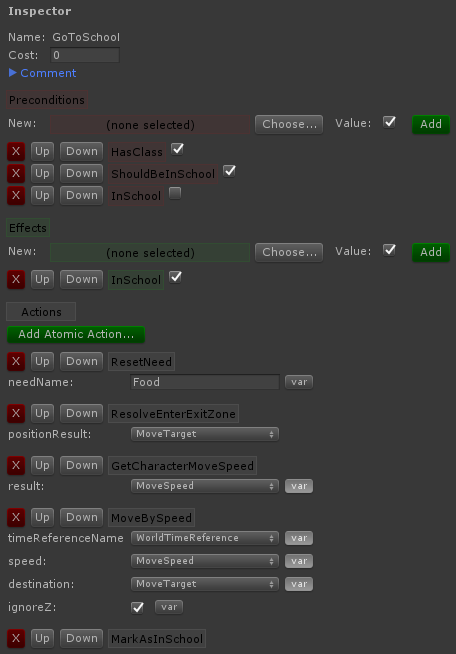

Per GOAP action, instead of doing only one action, our custom GOAP action contains a set of modular atomic actions. Each atomic action is executed in sequence. This is what it looks like in editor:

By doing it this way, I can make reusable atomic actions that can be used by any agent. A GOAP action then is just a named object that contains preconditions, effects, and a set of atomic actions.
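As a rough illustration of that shape, here is a minimal sketch of what such a container could look like. The class layout, the dictionary-based conditions, and the names `MoveToTarget`, `PlayAnimation`, `AttendClass`, and `HasAssignedClassroom` are assumptions made up for this example, not our actual code.

```csharp
using System.Collections.Generic;

// Placeholder standing in for the atomic action base class.
public abstract class GoapAtomAction { }

// Hypothetical container: a named object holding preconditions,
// effects, and an ordered list of atomic actions.
public class GoapAction {
    public readonly string Name;

    // Condition name -> value required before the action can be used.
    public readonly Dictionary<string, bool> Preconditions = new Dictionary<string, bool>();

    // Condition name -> value the action is expected to produce.
    public readonly Dictionary<string, bool> Effects = new Dictionary<string, bool>();

    // Atomic actions, executed in list order.
    public readonly List<GoapAtomAction> Atoms = new List<GoapAtomAction>();

    public GoapAction(string name) {
        Name = name;
    }
}

// Hypothetical reusable atoms that any agent's actions could share.
public class MoveToTarget : GoapAtomAction { }
public class PlayAnimation : GoapAtomAction { }

public static class GoapActionExample {
    // Builds one action out of two reusable atoms.
    public static GoapAction BuildAttendClass() {
        GoapAction action = new GoapAction("AttendClass");
        action.Preconditions["HasAssignedClassroom"] = true;
        action.Effects["StudentBehaviour"] = true;
        action.Atoms.Add(new MoveToTarget());
        action.Atoms.Add(new PlayAnimation());
        return action;
    }
}
```

The point of the split is that `MoveToTarget` only has to be written once; a different GOAP action can reuse it with a different set of preconditions and effects.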

GoapResult

I incorporated the concept of action results, as in Behaviour Trees. An atomic action execution returns either SUCCESS, FAILED, or RUNNING. This is what the atomic action base class looks like:

    // Base class for the modular atomic actions that a GOAP action runs in sequence.
    public abstract class GoapAtomAction {

        public virtual void ResetForPlanning(GoapAgent agent) {
        }

        public virtual bool CanExecute(GoapAgent agent) {
            return true;
        }

        // SUCCESS moves on to the next atomic action, RUNNING keeps this
        // one active, and FAILED fails the whole plan.
        public virtual GoapResult Start(GoapAgent agent) {
            return GoapResult.SUCCESS;
        }

        public virtual GoapResult Update(GoapAgent agent) {
            return GoapResult.SUCCESS;
        }

        public virtual void OnFail(GoapAgent agent) {
        }

    }

When an atomic action returns FAILED, the whole current plan fails and the agent plans again. A RUNNING result means that the current action is still running, which also means that the current plan is still ongoing. A SUCCESS result means that the action has finished executing and the agent can proceed to the next atomic action. When all of the atomic actions have returned SUCCESS, the whole GOAP action is a success and the next GOAP action in the plan is executed.

This concept makes it easy for me to add failure conditions while an action is being executed. Whenever one action fails, the agent automatically replans and proceeds to execute its new set of actions.
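To make the result handling concrete, here is a minimal sketch of a runner that steps through a list of atomic actions. `AtomSequenceRunner`, `WaitTicks`, and `AlwaysFail` are hypothetical names for this example, and the sketch assumes that `Start()` is called on the first tick of an atom and `Update()` on later ticks while it keeps returning RUNNING; the real system may order these calls differently.

```csharp
using System.Collections.Generic;

public enum GoapResult { SUCCESS, FAILED, RUNNING }

// Empty stand-in for the real agent class.
public class GoapAgent { }

// Trimmed copy of the base class, enough for this sketch.
public abstract class GoapAtomAction {
    public virtual GoapResult Start(GoapAgent agent) { return GoapResult.SUCCESS; }
    public virtual GoapResult Update(GoapAgent agent) { return GoapResult.SUCCESS; }
    public virtual void OnFail(GoapAgent agent) { }
}

// Hypothetical runner for the atoms of a single GOAP action.
public class AtomSequenceRunner {
    private readonly List<GoapAtomAction> atoms;
    private int index;     // atom currently being executed
    private bool started;  // whether Start() was already called on it

    public AtomSequenceRunner(List<GoapAtomAction> atoms) {
        this.atoms = atoms;
    }

    // Intended to be called once per frame until it stops returning RUNNING.
    public GoapResult Tick(GoapAgent agent) {
        while (index < atoms.Count) {
            GoapAtomAction atom = atoms[index];
            GoapResult result = started ? atom.Update(agent) : atom.Start(agent);
            started = true;

            if (result == GoapResult.RUNNING) {
                return GoapResult.RUNNING; // try this atom again next tick
            }
            if (result == GoapResult.FAILED) {
                atom.OnFail(agent);
                return GoapResult.FAILED;  // the caller is expected to replan
            }

            // SUCCESS: move on to the next atom within the same tick.
            index++;
            started = false;
        }
        return GoapResult.SUCCESS; // every atom succeeded
    }
}

// Hypothetical atom that stays RUNNING for a fixed number of ticks.
public class WaitTicks : GoapAtomAction {
    private readonly int ticks;
    private int remaining;

    public WaitTicks(int ticks) { this.ticks = ticks; }

    public override GoapResult Start(GoapAgent agent) {
        remaining = ticks;
        return remaining > 0 ? GoapResult.RUNNING : GoapResult.SUCCESS;
    }

    public override GoapResult Update(GoapAgent agent) {
        remaining--;
        return remaining > 0 ? GoapResult.RUNNING : GoapResult.SUCCESS;
    }
}

// Hypothetical atom that fails immediately and records that OnFail ran.
public class AlwaysFail : GoapAtomAction {
    public bool FailHandled;
    public override GoapResult Start(GoapAgent agent) { return GoapResult.FAILED; }
    public override void OnFail(GoapAgent agent) { FailHandled = true; }
}
```

In this sketch the agent would call `Tick()` every frame, move to the next GOAP action in the plan on SUCCESS, and throw the plan away and replan on FAILED.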

Condition Resolver

Condition Resolvers are objects that query the current world conditions needed during planning. I implemented this as another base class in our system. The concrete classes can then be selected in the editor. This is what the base class looks like:

    public abstract class ConditionResolver {

        private bool resolved;
        private bool conditionMet;

        public ConditionResolver() {
            Reset();
        }

        // Clears the cached result so the next IsMet() call resolves again.
        public void Reset() {
            this.resolved = false;
            this.conditionMet = false;
        }

        public bool IsMet(GoapAgent agent) {
            if(!this.resolved) {
                // Not yet resolved
                this.conditionMet = Resolve(agent);
                this.resolved = true;
            }

            return this.conditionMet;
        }

        protected abstract bool Resolve(GoapAgent agent);

    }

Note that the logic here ensures Resolve() is invoked only once until Reset() is called. Concrete subclasses only need to override this method. Such a method may execute complex calculations, so we need to make sure that it’s called only once, when needed during planning.

This is what it looks like in the editor:

All conditions default to false unless they have a resolver which is used to query the actual state of the condition.
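Here is a minimal sketch of a concrete resolver together with a lookup that applies the default-to-false rule. `IsTiredResolver`, `ConditionTable`, and the `Energy` field on the agent are hypothetical and exist only for this example; the base class is a condensed copy of the one above.

```csharp
using System.Collections.Generic;

// Stand-in agent with one made-up field for the demo.
public class GoapAgent {
    public int Energy = 100;
}

// Condensed copy of the base class.
public abstract class ConditionResolver {
    private bool resolved;
    private bool conditionMet;

    public void Reset() {
        resolved = false;
        conditionMet = false;
    }

    public bool IsMet(GoapAgent agent) {
        if (!resolved) {
            conditionMet = Resolve(agent);
            resolved = true;
        }
        return conditionMet;
    }

    protected abstract bool Resolve(GoapAgent agent);
}

// Hypothetical resolver: the condition holds when the agent is low on energy.
public class IsTiredResolver : ConditionResolver {
    public int ResolveCalls; // only here to show the caching at work

    protected override bool Resolve(GoapAgent agent) {
        ResolveCalls++;
        return agent.Energy < 20;
    }
}

// Hypothetical lookup: a condition without a resolver is treated as false.
public class ConditionTable {
    private readonly Dictionary<string, ConditionResolver> resolvers =
        new Dictionary<string, ConditionResolver>();

    public void Register(string conditionName, ConditionResolver resolver) {
        resolvers[conditionName] = resolver;
    }

    // Would be called before each planning pass so stale results are dropped.
    public void ResetAll() {
        foreach (ConditionResolver resolver in resolvers.Values) {
            resolver.Reset();
        }
    }

    public bool IsMet(string conditionName, GoapAgent agent) {
        ConditionResolver resolver;
        return resolvers.TryGetValue(conditionName, out resolver) && resolver.IsMet(agent);
    }
}
```

With this layout, querying the same condition several times during one planning pass runs `Resolve()` once, and `ResetAll()` before the next pass makes the resolvers look at the world again.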

Usage

Once the conditions, resolvers, and actions have been set up, all that’s left to do is to add goal conditions and invoke Replan().

    void Start() {
        // The component type is GoapAgent, as used by the calls below
        this.agent = GetComponent<GoapAgent>();
        Assertion.AssertNotNull(this.agent);

        // Start the AI
        this.agent.ClearGoals();
        this.agent.AddGoal("StudentBehaviour", true);
        this.agent.Replan();
    }

If there are new goals to satisfy, the same calls can be invoked to change the goal(s) so that a new plan is executed.

So Far So Good

Our custom GOAP system is working well for us… for now. I now have working worker agents and student agents. More will be added later on, including cooks, janitors, etc. Here’s hoping that we don’t need to revamp the system, as we’re already so deep into it.

Thanks for reading! If you'd like to be updated on the latest Squeaky Wheel news, please sign up for our mailing list, join our Facebook group, follow us on Twitter, or subscribe to our Youtube channel! Please let us know if this is something you would be interested in supporting via Early Access! Any feedback on that would be most appreciated!